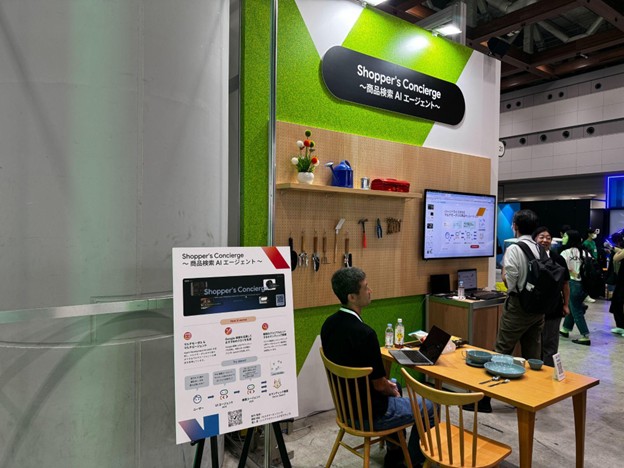

From 5–6 August 2025, I attended Google Cloud NEXT Tokyo ’25 at Tokyo Big Sight, set on reclaimed land in Tokyo Bay. Cross the Rainbow Bridge and you arrive in a place where a Statue of Liberty replica shares the skyline with the full-scale Unicorn Gundam: a place where past and future stand side by side.

This year, Google chose Tokyo Big Sight, Japan’s largest exhibition centre. It was no surprise that AI was the star of the show. The choice of such a grand venue sent a clear message: Google is connecting today’s world to an AI-powered future.

Unlike Google’s partner-exclusive conferences, this one was open to the public, drawing engineers, analysts, executives, AI enthusiasts, creators, and curious visitors alike. It was a chance to explore the possibilities of AI first-hand and see how Google Cloud is weaving AI into its products and services.

Google Cloud NEXT is not just about hearing announcements; it is about feeling the momentum of where the industry is heading. The expo was divided into areas such as Data, Security, Application & Infrastructure, Shopping, Creation, and Maps. There was no area labelled “AI”, because artificial intelligence is now woven into every Google product, in every area.

There were many takeaways, and I’d like to share a few highlights for those who couldn’t attend:

1. AI in Data - Enriching Your Data Quality

“Garbage in, garbage out” was a phrase I heard in almost every data-related session at the event. My company, Ayudante, is largely focused on ensuring that the data my clients collect is accurate, consistent, and ready for use, whether for retargeting campaigns, UX improvements, or other strategic purposes. So I will focus mainly on the data side.

As data consultants, we cannot work with data that does not exist, nor can we easily fix inconsistent formats once the data has passed through multiple development teams. On Day 1, Yu Yamada, Customer Engineer at Google Japan, shared Google’s vision for BigQuery’s future and introduced the Data Governance Agent.

This feature aims to automate data governance tasks through:

In short, Google’s direction is clear: make data cleaner, better connected, and ready for decision-making without relying solely on manual intervention.

If your product data is stored in BigQuery, Google is now making it easier to keep that data clean and usable. With Google Gemini and Automated Metadata Curation, BigQuery can scan your datasets, automatically generate clear descriptions for tables and columns, and suggest query tips.

In the not-so-distant future, the system could fill in missing details, standardise formats, and provide explanations that you can review, edit, and copy directly into your schema documentation.

This not only improves data quality but also makes your datasets easier to understand and work with across teams.

Another experimental update offers a glimpse of Google’s future direction for BigQuery. With the new Data Quality capabilities, BigQuery will use machine learning to monitor tables and views automatically and detect unusual data patterns before they turn into costly mistakes, fraud, or compliance breaches.
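To make “detecting unusual data patterns” a little more concrete, here is a minimal sketch of one of the simplest checks a monitoring job could run: comparing today’s row count for a table against its recent history using a z-score. This is my own illustration, not how BigQuery’s Data Quality feature works internally; the function name `is_anomalous`, the use of daily row counts as the signal, and the 3-sigma threshold are all assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Return True if `today` lies more than `threshold` standard deviations
    from the mean of `history` (a plain z-score check).

    Assumption for illustration: `history` holds the daily row counts of one
    table over recent days, and `today` is the latest count.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return today != mu
    return abs(today - mu) / sigma > threshold


# Hypothetical row counts for the past week.
history = [1000, 1020, 980, 1010, 990, 1005, 995]
print(is_anomalous(history, 400))   # True: a sudden drop, e.g. a broken tag
print(is_anomalous(history, 1015))  # False: normal day-to-day variation
```

A flat baseline like this ignores weekly seasonality and campaign spikes, which is exactly where a learned model has the advantage over a hand-written rule.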

Even though this is still experimental, it is clear that Google is steering BigQuery towards being more proactive, intelligent, and hands-off in maintaining data reliability.

2. Google Maps × GCP Data Visualisation Products

Google Maps is becoming more integrated with GCP tools, allowing you to combine geospatial data with business metrics in BigQuery and visualise them through Looker. For example, you can overlay metrics such as “stores with high footfall” directly onto a map view, making it easy to identify prime locations or underperforming areas.
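As a rough sketch of the “overlay metrics on a map” step, the snippet below turns per-store query results into a GeoJSON FeatureCollection, a format most web map layers can read, and labels each store relative to the median footfall. The store names, coordinates, footfall figures, and the 20% band around the median are all made up for illustration; this is not the Looker or Google Maps integration itself.

```python
import json
from statistics import median


def stores_to_geojson(stores: list[dict], band: float = 0.2) -> dict:
    """Convert store rows into a GeoJSON FeatureCollection.

    Each store is labelled 'prime' if its footfall is more than `band`
    above the median, 'underperforming' if more than `band` below it,
    and 'typical' otherwise. Assumes the median footfall is non-zero.
    """
    mid = median(s["footfall"] for s in stores)
    features = []
    for s in stores:
        ratio = s["footfall"] / mid
        if ratio > 1 + band:
            status = "prime"
        elif ratio < 1 - band:
            status = "underperforming"
        else:
            status = "typical"
        features.append({
            "type": "Feature",
            # GeoJSON orders coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [s["lng"], s["lat"]]},
            "properties": {"name": s["name"], "footfall": s["footfall"], "status": status},
        })
    return {"type": "FeatureCollection", "features": features}


# Hypothetical rows, e.g. a BigQuery result joined with store locations.
stores = [
    {"name": "Shinjuku", "lat": 35.6896, "lng": 139.7006, "footfall": 12000},
    {"name": "Shibuya", "lat": 35.6580, "lng": 139.7016, "footfall": 9000},
    {"name": "Odaiba", "lat": 35.6270, "lng": 139.7760, "footfall": 4000},
]
print(json.dumps(stores_to_geojson(stores), indent=2))
```

Labelling relative to the median rather than a fixed number keeps the categories meaningful even when overall footfall rises or falls across the whole network.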

This kind of integration brings spatial analytics to a new level of accessibility. It is no longer just about displaying data on a map; it is about linking location intelligence with AI-driven insights so you can make better, faster, and more confident business decisions.

3. AI in Retail: Redefining the Shopping Experience - Google Shopper’s Concierge

I have recently taken up boxing. As a beginner, I wanted to buy a pair of affordable gloves (because sharing gloves at the gym is definitely not appealing). I asked ChatGPT for a recommendation and ended up with a pair for around ¥7,000, which was perfect for beginners.

That was when it hit me: AI chatbots are no longer just answering questions; they are quietly guiding us towards purchases, whether intentionally or not.

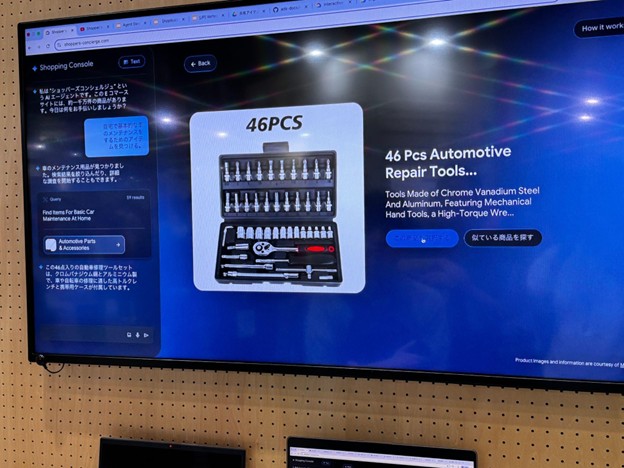

At Google Cloud NEXT, I saw exactly where Google is taking this idea: the Google Shopper’s Concierge, an AI-powered product search engine. It connects to multiple e-commerce sites and uses vector search and multimodal product curation to display products that match the customer’s needs within seconds. In other words, it is a far more efficient way of matching customers with businesses.
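The core idea behind vector search fits in a few lines: products and the shopper’s request are each represented as vectors (embeddings), and products are ranked by how closely their vectors point in the same direction as the request, measured by cosine similarity. The three-dimensional vectors below are invented by hand (think of the axes as roughly “boxing”, “gloves”, and “price level”); real embeddings come from a model, have hundreds of dimensions, and are usually searched with approximate nearest-neighbour indexes rather than this brute-force loop. This is a sketch of the general technique, not how Shopper’s Concierge is implemented.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], catalogue: dict[str, list[float]], k: int = 2) -> list[str]:
    """Brute-force nearest-neighbour search: score every product, keep the best k."""
    ranked = sorted(catalogue, key=lambda name: cosine(query, catalogue[name]), reverse=True)
    return ranked[:k]


# Hand-made toy embeddings: [boxing, gloves, price level].
catalogue = {
    "beginner boxing gloves": [0.9, 0.9, 0.2],
    "pro leather boxing gloves": [0.9, 0.9, 0.9],
    "yoga mat": [0.0, 0.0, 0.3],
}
query = [1.0, 1.0, 0.1]  # roughly "affordable boxing gloves"
print(top_k(query, catalogue))  # ['beginner boxing gloves', 'pro leather boxing gloves']
```

Because cosine similarity compares direction rather than magnitude, the cheaper gloves win here: their low “price level” component matches the request’s emphasis on affordability.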

No lengthy explanation is needed here. We all know Google is the world’s largest advertising technology company. It is easy to see where this is going, and just how powerful it could be once it is fully deployed.

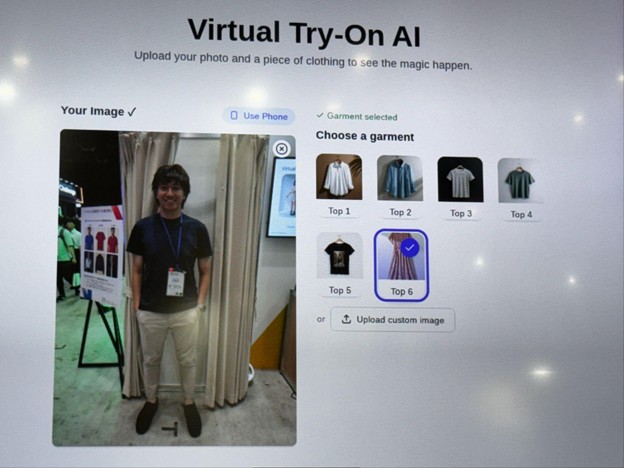

Shopping for clothes online can be frustrating. The product photo might look amazing, but you never really know if the fit or colour will suit you until it arrives, and by then, returns can be a hassle.

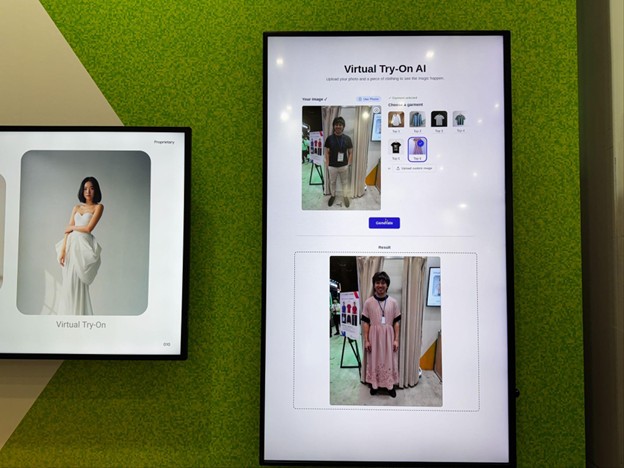

Virtual Try-On AI solves that by letting you upload a full-body photo and instantly see how you would look in the selected outfit.

Its potential goes far beyond online shopping. Even in physical stores, you could scan an item and try it virtually before stepping into a cramped fitting room. We all know store lighting can be unflattering, and sometimes an outfit that looks fine under those lights appears completely different once you step outside.

At the booth, I gave it a go. In the fitting room preview, the pink dress looked okay on me. But imagining myself walking through the mall or this expo in it? Let’s just say the look might raise more than a few eyebrows (or prompt a few screams).

That’s exactly why this AI has value: it bridges the gap between the digital and real-world experience, helping you make a more confident choice before you buy.

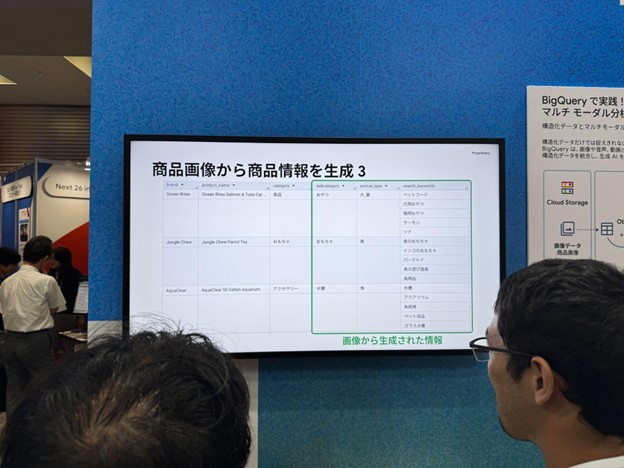

Google is taking the shopping experience a step further with AI that can enhance product discovery. By simply uploading a product image, Gemini can automatically analyse it and generate additional category tags.

This means that even if your product listing is missing certain details, AI can enrich it with metadata such as category, subcategory, target audience, or relevant search keywords.

For example, a photo of a cat scratching post could be instantly tagged under “pet accessories”, “cat furniture”, and “indoor play”, making it much easier for shoppers to find.
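Whatever model produces the tags, a seller still has to decide how suggestions are merged into an existing listing. Below is a minimal sketch of one cautious policy: non-empty seller values are never overwritten, AI suggestions only fill empty fields, keyword lists are merged without duplicates, and every field the AI touched is flagged for human review. The `suggested` dictionary stands in for the output of an image-tagging call to Gemini, which is not shown here; the field names and the `enrich_listing` function are hypothetical.

```python
def enrich_listing(listing: dict, suggested: dict) -> dict:
    """Return a copy of `listing` with gaps filled from `suggested`.

    - Non-empty seller values are kept as they are.
    - Empty or missing scalar fields take the suggested value.
    - List fields (e.g. keywords) gain any suggested items not already present.
      Assumes list-valued fields are lists in both dictionaries.
    Every field changed this way is listed under "needs_review".
    """
    enriched = dict(listing)  # shallow copy: the input listing is left untouched
    review = []
    for field, value in suggested.items():
        current = enriched.get(field)
        if isinstance(value, list):
            existing = list(current or [])
            added = [v for v in value if v not in existing]
            if added:
                enriched[field] = existing + added
                review.append(field)
        elif not current:
            enriched[field] = value
            review.append(field)
    enriched["needs_review"] = review
    return enriched


# Hypothetical seller listing with a missing category.
listing = {"title": "Cat scratching post", "category": "", "keywords": ["scratching post"]}
# Hypothetical tags, standing in for what an image model might return.
suggested = {
    "category": "pet accessories",
    "subcategory": "cat furniture",
    "keywords": ["cat furniture", "indoor play", "scratching post"],
}
result = enrich_listing(listing, suggested)
print(result["keywords"])      # ['scratching post', 'cat furniture', 'indoor play']
print(result["needs_review"])  # ['category', 'subcategory', 'keywords']
```

Keeping the review list alongside the data reflects the point made later in this article: AI output should be checked by someone who understands the domain before it goes live.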

In the future, using Gemini, customers will discover relevant products faster, and sellers will benefit from improved visibility without manually tagging every item. AI is making the connection between products and potential buyers smarter and more seamless.

4. What Else Was Inside the Expo

Beyond the main highlights, the expo featured dedicated zones on security, applications, infrastructure, and creation, as well as booths from numerous Google Certified Cloud Partners, demonstrating success stories and products designed to help clients achieve their business goals.

Without a doubt, this was the most impressive cloud technology expo I have ever attended.

After each 30-minute session, speakers moved to the “Ask the Expert” area, giving attendees the chance to dive deeper, ask follow-up questions, and gain insights that went beyond the scheduled presentations.

A personal highlight was visiting the GCP Certified Engineer Lounge, reserved for professionals with official Google Cloud certifications. It was a quiet spot to recharge, but more importantly, it offered a chance to connect with peers who had faced similar technical challenges or to explore new business opportunities together.

Having earned my Associate Cloud Engineer and Data Engineer certifications last year, I was happy to qualify. And with the refreshments and comfortable space Google provided, it turned out to be the perfect spot to wrap up this review.

Over the past few years, I have attended many technology events both inside and outside Japan, and everyone seems to be asking the same question. Before sharing my own feedback, I would like to begin with a quote I heard in one of the sessions:

“AI is here to maximise human potential, not just for efficiency or reporting.”

My interpretation of this phrase is that before asking AI to help you complete a task, you need enough understanding of the task and its professional area to write a good prompt and to check that the output meets industry standards.

ChatGPT, built on GPT-3.5, was released at the end of 2022, and it was revolutionary. Now it is 2025, and there are still many people who do not understand how to use AI correctly, or who blindly trust everything AI tells them. Worse still, they adopt AI-generated output (such as code and reports) without fully understanding it.

Those people will be replaced, not only by AI but also by people who combine AI with industry expertise, or simply by someone who is better than they are.

Use AI wisely, and it will unlock your full potential and creativity.

For me, the ability to use AI is becoming as basic as using office tools. No one asks in a job interview whether you can use Excel or PowerPoint, because it is assumed.

These days, everyone is talking about “AI blah blah blah”, but AI is not just chatbots. It is about thoughtful application across a wide range of areas.

It has been less than three years since ChatGPT arrived, yet much has already changed. I can’t wait to see what AI brings next year, and the year after that.

In closing, thank you very much for taking the time to read this article.